
QoS on Nexus 5500 Series Switches

Nexus 5500 QoS provides per-system, class-based traffic control. Its main features are:

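- Lossless Ethernet: Priority Flow Control (PFC, IEEE 802.1Qbb)

- Traffic Protection: Bandwidth management (Enhanced Transmission Selection, IEEE 802.1Qaz)

- Configuration Signaling to Endpoints: provided by DCBX (Data Center Bridging Exchange)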

We will not go deep into the Nexus 5500 hardware architecture here; instead, we focus on how the Nexus 5500 handles QoS and the virtual output queue (VOQ) concept.

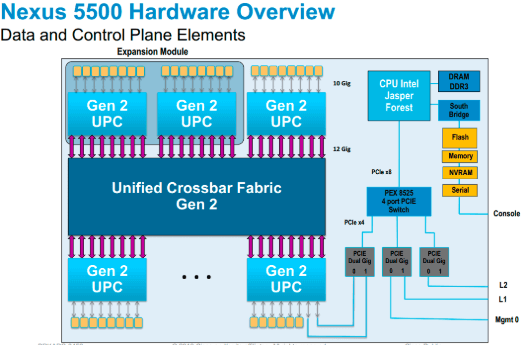

Each Nexus 5500 is composed of the following components:

- The ingress unified port controller (UPC)

- The crossbar fabric

- The egress UPC

Each UPC manages the traffic for a group of eight 1/10 GE ports. The UPCs provide traffic buffering and arbitration for access across the crossbar fabric, among other functions.

On the Nexus 5500, transit packets are handled entirely by the UPCs and never by the control-plane CPU. All classification, marking, queuing, and policing are done in hardware, on the ingress UPC, the egress UPC, or the crossbar fabric.

Nexus 5500 supports the following types of QoS policies:

- qos: defines Modular QoS CLI (MQC) objects used for marking and policing

- network-qos: defines network-wide QoS properties (such as those used in data center bridging [DCB] networks); it should be applied consistently on all switches participating in the network

- queuing: defines MQC objects used for queuing and scheduling, in addition to a limited set of marking objects

qos policies are applied on the ingress interface or the crossbar fabric, network-qos policies are applied only on the crossbar fabric, and queuing policies are applied on the ingress interface, the egress interface, and the crossbar fabric.
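The attachment rules in the previous paragraph can be captured as a small lookup table. This is a hypothetical Python model for checking the rules as stated in this article, not an NX-OS API; the names `Attachment`, `ALLOWED`, and `can_attach` are illustrative only.

```python
from enum import Enum


class Attachment(Enum):
    """Places where a Nexus 5500 QoS policy can be attached (as described above)."""
    INGRESS_INTERFACE = "ingress interface"
    EGRESS_INTERFACE = "egress interface"
    CROSSBAR_FABRIC = "crossbar fabric"


# Allowed attachment points per policy type, taken from the text above.
ALLOWED = {
    "qos": {Attachment.INGRESS_INTERFACE, Attachment.CROSSBAR_FABRIC},
    "network-qos": {Attachment.CROSSBAR_FABRIC},
    "queuing": {
        Attachment.INGRESS_INTERFACE,
        Attachment.EGRESS_INTERFACE,
        Attachment.CROSSBAR_FABRIC,
    },
}


def can_attach(policy_type: str, where: Attachment) -> bool:
    """Return True if a policy of `policy_type` may be attached at `where`."""
    if policy_type not in ALLOWED:
        raise ValueError(f"unknown policy type: {policy_type!r}")
    return where in ALLOWED[policy_type]


if __name__ == "__main__":
    # Print each policy type with its allowed attachment points.
    for ptype, points in ALLOWED.items():
        print(f"{ptype:12} -> {', '.join(sorted(p.value for p in points))}")
```

A table keyed by policy type keeps the rules in one place, so a check such as "can a network-qos policy go on an interface?" becomes a single lookup.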

Virtual Output Queue:

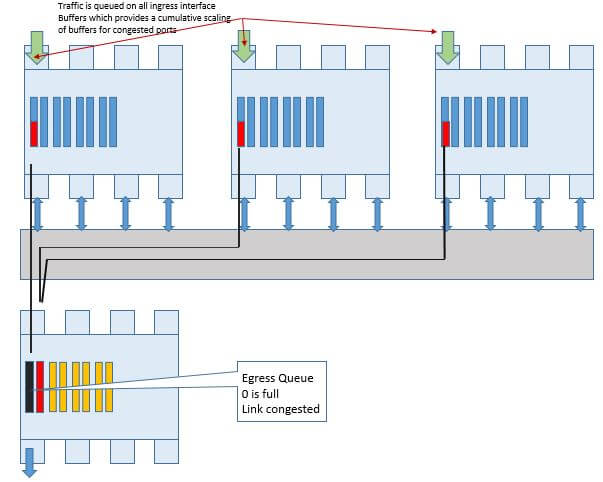

When a Nexus 5500 receives a packet and the destination interface is congested, the switch queues the packet at the ingress port. Each ingress port has a fairly small buffer (about 640 KB), which on its own is not enough to absorb congestion; this is where VOQ plays a very important role in queuing.

The total queue space available to a congested egress port equals the number of ingress ports sending to it multiplied by the queue depth per ingress port.

For example, if you have 10 ingress ports and each has a 640 KB buffer, then instead of relying only on the buffer available at the egress port, these ten ingress ports together provide 10 × 640 KB = 6.4 MB of buffer space for the congested (uplink) port.
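The buffer arithmetic above can be written as a one-line function. This is a minimal sketch of the idealized upper bound the text describes, assuming each ingress port can devote its whole buffer to the one congested egress port; in practice the ingress buffer is shared across classes and destinations. The function name `aggregate_voq_buffer_kb` is hypothetical.

```python
def aggregate_voq_buffer_kb(ingress_ports: int, buffer_per_port_kb: int) -> int:
    """Idealized total ingress buffer (KB) available to one congested egress port.

    Models the rule in the text: number of ingress ports x per-port queue depth.
    Assumes every ingress port's full buffer can hold traffic for that egress port.
    """
    if ingress_ports < 0 or buffer_per_port_kb < 0:
        raise ValueError("values must be non-negative")
    return ingress_ports * buffer_per_port_kb


if __name__ == "__main__":
    total_kb = aggregate_voq_buffer_kb(10, 640)
    # Uses 1 MB = 1000 KB, matching the 6.4 MB figure in the example.
    print(f"{total_kb} KB = {total_kb / 1000} MB")  # prints "6400 KB = 6.4 MB"
```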

Suppose three ingress ports are sending packets to a single egress port that is congested. The packets are held in the ingress port buffers until the egress queue has room to accept the next packet.
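The many-to-one case above can be illustrated with a tiny simulation: packets wait in their ingress VOQs and only cross to the egress port when it can accept them. This is a hypothetical model, not the Nexus 5500 scheduler; the egress rate of one packet per tick and the round-robin grant order are assumptions chosen for illustration, and the port names are examples.

```python
from collections import deque


def drain_to_egress(ingress_queues: dict[str, deque],
                    packets_per_tick: int = 1) -> list[tuple[int, str, str]]:
    """Simulate ingress VOQs draining into one congested egress port.

    Each tick the egress port accepts `packets_per_tick` packets; all other
    packets stay queued at their ingress port. Grants rotate round-robin across
    ingress ports that still have packets (an assumed arbitration policy).
    Returns a log of (tick, ingress_port, packet). Mutates the input queues.
    """
    order = deque(name for name, q in ingress_queues.items() if q)
    log = []
    tick = 0
    while order:
        for _ in range(min(packets_per_tick, len(order))):
            port = order.popleft()
            log.append((tick, port, ingress_queues[port].popleft()))
            if ingress_queues[port]:
                order.append(port)  # still has packets: back of the line
        tick += 1
    return log


if __name__ == "__main__":
    queues = {
        "Eth1/1": deque(["a1", "a2"]),
        "Eth1/2": deque(["b1"]),
        "Eth1/3": deque(["c1", "c2"]),
    }
    for tick, port, pkt in drain_to_egress(queues):
        print(tick, port, pkt)
```

Every packet eventually crosses, but the egress port never receives more than it can accept per tick; the backlog lives in the ingress buffers instead.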

Each Nexus 5500 ingress port has a set of eight VOQs for each egress port, one for each class of service. For example, if a server is connected to port Eth1/1 and its traffic goes to three egress ports (Eth1/46, Eth1/47, and Eth1/48), then three sets of eight VOQs on ingress port Eth1/1 are used, one set per egress port. In total, NX-OS supports 1024 VOQs on every ingress port (8 classes × 128 destinations). On the Nexus 5596, the 128 destinations come from 96 ports plus two optional Layer 3 daughter cards, each having 16 connections to the crossbar fabric: 96 + 16 + 16 = 128.
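The VOQ counts above follow from simple multiplication. This sketch reproduces them under the article's numbers (8 classes, 16 fabric connections per optional Layer 3 card); the function names and the (egress port, class) tuple layout are hypothetical, used only to make the "eight VOQs per egress port" idea concrete.

```python
CLASSES_OF_SERVICE = 8  # one VOQ per class of service, per destination


def voqs_per_ingress_port(front_panel_ports: int, l3_cards: int = 0,
                          fabric_links_per_l3_card: int = 16) -> int:
    """VOQs on one ingress port: 8 classes x number of fabric destinations."""
    destinations = front_panel_ports + l3_cards * fabric_links_per_l3_card
    return CLASSES_OF_SERVICE * destinations


def voqs_in_use(egress_ports: list[str]) -> list[tuple[str, int]]:
    """(egress_port, class) pairs used on one ingress port: 8 per egress port."""
    return [(port, cos) for port in egress_ports for cos in range(CLASSES_OF_SERVICE)]


if __name__ == "__main__":
    # Nexus 5596 example from the text: 96 ports + 2 L3 cards x 16 = 128 destinations.
    print(voqs_per_ingress_port(96, l3_cards=2))  # prints 1024
    # Server on Eth1/1 sending to three egress ports: 3 x 8 VOQs in use.
    print(len(voqs_in_use(["Eth1/46", "Eth1/47", "Eth1/48"])))  # prints 24
```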

A central arbiter manages the queues on each port and grants them access to cross the crossbar fabric.

For example, if queue 0 on an egress port is congested while the other seven queues are not, the central arbiter tells the ingress port to hold packets for queue 0 until the congestion on the egress port clears, while packets in the other queues keep flowing as before.
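The per-class behavior above, where one congested class does not block the others, can be sketched as a single arbitration round. This is a simplified, hypothetical model: it assumes the arbiter grants one packet per non-congested VOQ per round, whereas the real central arbiter's scheduling is more involved. The function `arbitrate` and the packet names are illustrative.

```python
from collections import deque


def arbitrate(voqs: dict[tuple[str, int], deque],
              congested: set[tuple[str, int]]) -> list[str]:
    """One arbitration round for a single ingress port.

    Each VOQ is keyed by (egress_port, class_of_service). VOQs whose egress
    queue is congested are held at ingress; every other non-empty VOQ is
    granted one packet (a simplifying assumption). Returns the packets sent.
    """
    sent = []
    for key, queue in voqs.items():
        if queue and key not in congested:
            sent.append(queue.popleft())
    return sent


if __name__ == "__main__":
    voqs = {("Eth1/48", cos): deque([f"cos{cos}-pkt"]) for cos in range(8)}
    congested = {("Eth1/48", 0)}  # queue 0 on the egress port is congested
    print(arbitrate(voqs, congested))  # cos1-pkt .. cos7-pkt are sent
    print(list(voqs[("Eth1/48", 0)]))  # cos0-pkt is still held at ingress
    congested.clear()                  # congestion on queue 0 clears
    print(arbitrate(voqs, congested))  # the held cos0-pkt is now sent
```

Because each class has its own VOQ, holding queue 0 does not stall packets behind it in the other classes, which is how VOQ avoids head-of-line blocking.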
