Nexus 5500 & vPC

The Cisco Nexus 5000 series switches consist of the following models, grouped by generation:

Generation 1: The Nexus 5000 switches, which include the 5010 and 5020 models. Both are now end of sale.

Generation 2: The Nexus 5500 switches, which are currently deployed as datacenter access layer switches together with Cisco Fabric Extenders (FEX), discussed a little later.

The Generation 2 Nexus 5500 family typically includes:

- Nexus 5548P

- Nexus 5548UP

- Nexus 5596UP

- Nexus 5596T

These switches have the following capabilities:

- Up to 1152 ports in a single management domain using Cisco FEX architecture

- Up to 96 unified ports

- Layer 3 capability, provided by inserting a separate Layer 3 module into the expansion slot
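The 1152-port figure is easier to reason about as simple arithmetic. The sketch below assumes 24 attached Fabric Extenders with 48 host-facing ports each. Neither number is stated in this article; both are illustrative inputs that happen to multiply out to the quoted figure, and the function name is hypothetical. Check the supported FEX count for your platform and software release.

```python
# Illustrative arithmetic only: the FEX count and ports-per-FEX values
# are ASSUMPTIONS, not figures taken from this article.
ASSUMED_FEX_COUNT = 24            # assumed number of FEXes per parent switch
ASSUMED_HOST_PORTS_PER_FEX = 48   # assumed host-facing ports per FEX


def max_managed_ports(fex_count: int, host_ports_per_fex: int) -> int:
    """Return the host ports reachable through FEXes in one management domain."""
    if fex_count < 0 or host_ports_per_fex < 0:
        raise ValueError("counts must be non-negative")
    return fex_count * host_ports_per_fex


if __name__ == "__main__":
    total = max_managed_ports(ASSUMED_FEX_COUNT, ASSUMED_HOST_PORTS_PER_FEX)
    print(total)  # 24 * 48 = 1152
```

Because every FEX is managed from its parent switch, the total scales linearly with the number of FEXes the parent supports.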

The diagram below highlights the first and second generation datacenter access switches:

Of the models above, the Nexus 5548 is now end of sale. A switch with UP appended to its model number has unified ports, which means its ports can carry Ethernet as well as storage (FC) and FCoE traffic.

Next Generation Datacenter 5600 Switch:

- Nexus 5600 switches are deployed to meet the needs of virtualized environments and cloud deployments.

- They offer up to 2304 ports in a single management domain with Cisco FEX.

- Capable of carrying FC, FCoE, and Ethernet traffic

- Provides support for VXLAN traffic and deployment.

- Provides integrated Layer 3 capability

- Supports Gigabit Ethernet, 10 Gigabit Ethernet, 40 Gigabit Ethernet, and 100 Gigabit Ethernet; native Fibre Channel; and Fibre Channel over Ethernet.

Models included in this portfolio are:

- Cisco Nexus 56128P Switch

- Cisco Nexus 5696Q Switch

- Cisco Nexus 5672UP Switch

- Cisco Nexus 5672UP-16G Switch

- Cisco Nexus 5648Q Switch

- Cisco Nexus 5624Q Switch

Nexus 5500 Switch Architecture

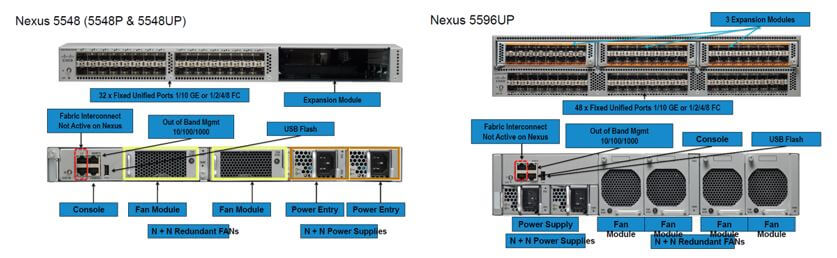

Below is the hardware description of the Nexus 5548P, 5548UP, and 5596UP models. The figure below summarizes the hardware capabilities of the Nexus 5500 series. Each switch has one or three expansion slots, which accept additional expansion modules if more ports are required in the future.

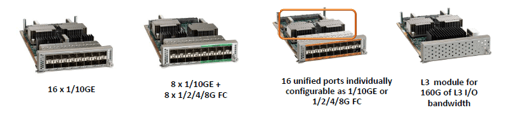

Below is a pictorial view of the types of expansion modules available for the Nexus 5500 switch models:

Below is a brief overview of the Nexus 5500 hardware:

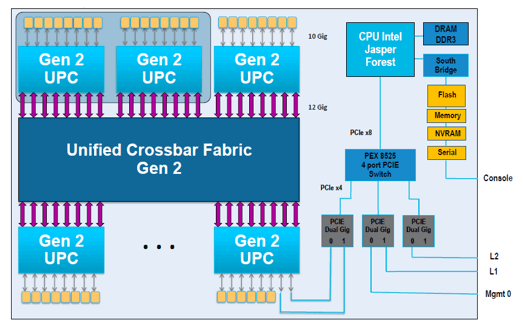

The Nexus 5000/5500 uses a distributed forwarding architecture. The Unified Port Controller (UPC) connects 8 physical ports and provides 8 virtual queues per port for QoS and queuing. All port-to-port traffic passes through a UPC. Each UPC is in turn connected to the crossbar fabric, which interconnects all the UPCs in a Nexus 5000 or 5500 series switch.
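The 8-ports-per-UPC relationship can be sketched in a few lines of Python. This is a simplified model, not a description of the actual hardware wiring: it assumes ports are assigned to UPCs contiguously (ports 1-8 on the first UPC, 9-16 on the second, and so on), and all function names are hypothetical.

```python
PORTS_PER_UPC = 8    # from the text: each UPC connects 8 physical ports
QUEUES_PER_PORT = 8  # from the text: 8 virtual queues per port


def upc_for_port(port: int) -> int:
    """Map a 1-based port number to a 0-based UPC index.

    ASSUMPTION: ports are assigned to UPCs contiguously; real hardware
    may map ports differently.
    """
    if port < 1:
        raise ValueError("port numbers start at 1")
    return (port - 1) // PORTS_PER_UPC


def upcs_needed(port_count: int) -> int:
    """Return how many UPCs are required to serve port_count ports."""
    if port_count < 0:
        raise ValueError("port_count must be non-negative")
    return -(-port_count // PORTS_PER_UPC)  # ceiling division


def upc_pair(src_port: int, dst_port: int) -> tuple[int, int]:
    """Return the (ingress UPC, egress UPC) pair for a port-to-port flow."""
    return upc_for_port(src_port), upc_for_port(dst_port)


if __name__ == "__main__":
    print(upcs_needed(48))   # UPCs for a 48-port configuration
    print(upc_pair(3, 20))   # ingress and egress UPC indexes
    print(48 * QUEUES_PER_PORT)  # total virtual queues across 48 ports
```

When the ingress and egress UPC differ, the crossbar fabric is what carries the frame between them.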
