Amazon Redshift:

Amazon Redshift is a fast, petabyte-scale data warehouse service designed for OLAP workloads. It is mostly used for high-performance analysis and reporting over large datasets.

It gives you fast querying capabilities over structured data using standard SQL commands. It supports ODBC and JDBC connections, which lets it integrate with a wide range of data loading, reporting, data mining, and analytics tools.
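As a small illustration of the JDBC side, the sketch below assembles a connection URL of the form `jdbc:redshift://host:port/database`. The helper name `build_jdbc_url`, the example endpoint, and the database name `dev` are hypothetical placeholders and not taken from any real cluster; 5439 is the default Redshift port.

```python
DEFAULT_REDSHIFT_PORT = 5439  # default port for a Redshift cluster


def build_jdbc_url(endpoint: str, database: str, port: int = DEFAULT_REDSHIFT_PORT) -> str:
    """Return a JDBC URL for the cluster endpoint (hypothetical helper)."""
    if not endpoint or not database:
        raise ValueError("endpoint and database must be non-empty")
    if not 1 <= port <= 65535:
        raise ValueError(f"port out of range: {port}")
    return f"jdbc:redshift://{endpoint}:{port}/{database}"


# Placeholder endpoint; a real one is listed in the cluster's properties.
EXAMPLE_URL = build_jdbc_url(
    "examplecluster.abc123.us-east-1.redshift.amazonaws.com", "dev"
)
```

A reporting or SQL client tool would be given a URL of this shape, together with credentials, in its JDBC connection settings.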

Clusters and Nodes:

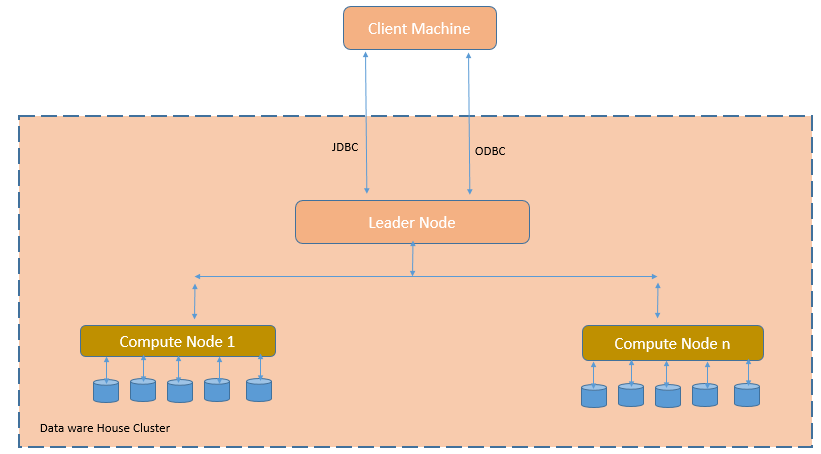

A cluster is composed of a leader node and one or more compute nodes. Clients interact directly only with the leader node; the compute nodes are transparent to external applications.

Amazon Redshift supports six node types, each with a different combination of CPU, memory, and storage. The node types are grouped into two classes: Dense Compute and Dense Storage.

Dense Compute node types support clusters of up to 326 TB using fast SSDs, whereas Dense Storage node types support clusters of up to 2 PB using large magnetic disks.
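The size ceilings above can be turned into a rough first-pass check. The following is only a sketch: the function name `suggest_node_class` is hypothetical, 2 PB is taken as 2000 TB, and a real sizing decision would also weigh query performance and cost, which this ignores.

```python
from typing import Optional

# Cluster size ceilings quoted in the text, in terabytes.
# Assumption: 2 PB is treated as 2000 TB.
DENSE_COMPUTE_MAX_TB = 326
DENSE_STORAGE_MAX_TB = 2000


def suggest_node_class(data_size_tb: float) -> Optional[str]:
    """Return the first node class whose ceiling fits the data, or None."""
    if data_size_tb <= 0:
        raise ValueError("data_size_tb must be positive")
    if data_size_tb <= DENSE_COMPUTE_MAX_TB:
        return "dense compute"
    if data_size_tb <= DENSE_STORAGE_MAX_TB:
        return "dense storage"
    return None  # larger than either class supports, per the figures above
```

For example, `suggest_node_class(500)` falls past the Dense Compute ceiling and so returns `"dense storage"`.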

The figure shows the components of an Amazon Redshift data warehouse cluster.

Each cluster contains one or more databases. User data for each table is distributed across the compute nodes. Your application or SQL client communicates with Amazon Redshift using standard JDBC or ODBC connections with the leader node, which in turn coordinates query execution with the compute nodes. Your application does not interact directly with the compute nodes.
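The division of labor described here can be pictured with a toy model. The sketch below is not Redshift's actual implementation: the class names, the round-robin row placement, and the sample sales data are all assumptions made for illustration. It only shows the general pattern of a client talking to a single leader, which spreads rows over compute nodes and merges their partial results.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Row = Tuple[str, float]  # (region, sale_amount), made-up sample schema


class ComputeNode:
    """Holds one portion of the table and computes partial aggregates."""

    def __init__(self) -> None:
        self.rows: List[Row] = []

    def partial_sum_by_region(self) -> Dict[str, float]:
        totals: Dict[str, float] = defaultdict(float)
        for region, amount in self.rows:
            totals[region] += amount
        return dict(totals)


class LeaderNode:
    """The only component the client talks to in this toy model."""

    def __init__(self, compute_node_count: int) -> None:
        if compute_node_count < 1:
            raise ValueError("a cluster needs at least one compute node")
        self.compute_nodes = [ComputeNode() for _ in range(compute_node_count)]

    def load(self, rows: List[Row]) -> None:
        # Round-robin placement, chosen here only for simplicity.
        for i, row in enumerate(rows):
            self.compute_nodes[i % len(self.compute_nodes)].rows.append(row)

    def total_sales_by_region(self) -> Dict[str, float]:
        # Ask every compute node for its partial result, then merge them.
        merged: Dict[str, float] = defaultdict(float)
        for node in self.compute_nodes:
            for region, subtotal in node.partial_sum_by_region().items():
                merged[region] += subtotal
        return dict(merged)


leader = LeaderNode(compute_node_count=3)
leader.load([("east", 10.0), ("west", 5.0), ("east", 2.5), ("west", 1.0), ("north", 4.0)])
result = leader.total_sales_by_region()
```

The calling code never touches a `ComputeNode` directly, which mirrors the point that applications connect only to the leader node.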
