Introduction & vSphere Requirements For NSX

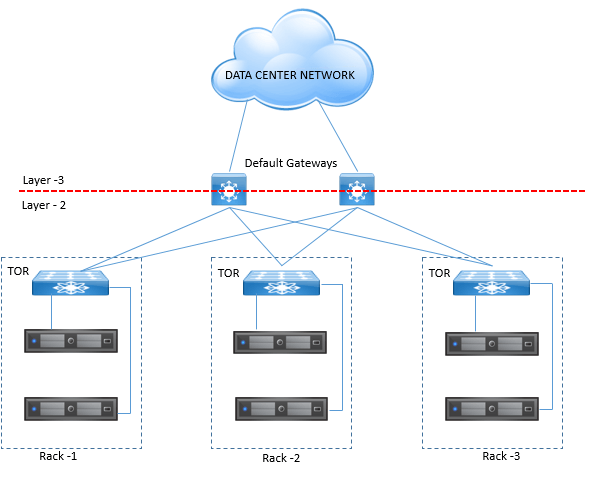

To understand the actual benefits of NSX, let's first look at what a traditional data center (DC) looks like.

Sometimes a collapsed access layer topology is used instead. In this design the top-of-rack (TOR) switch acts as a Layer 3 switch: it provides Layer 2 Ethernet connectivity to the workloads and also serves as their default gateway.

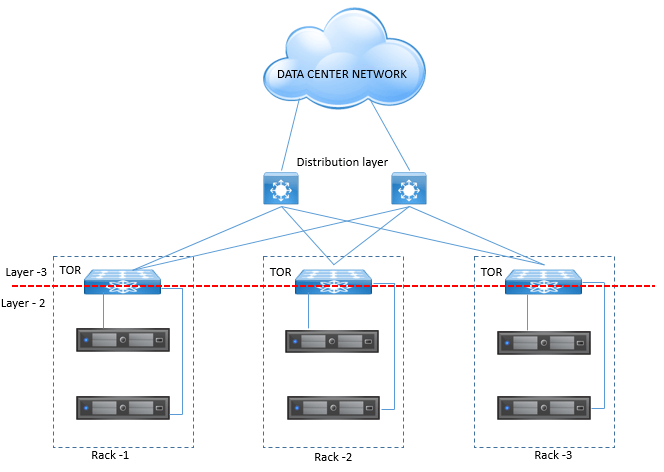

All ports configured as Layer 2 have STP functions disabled and are set to the forwarding state.

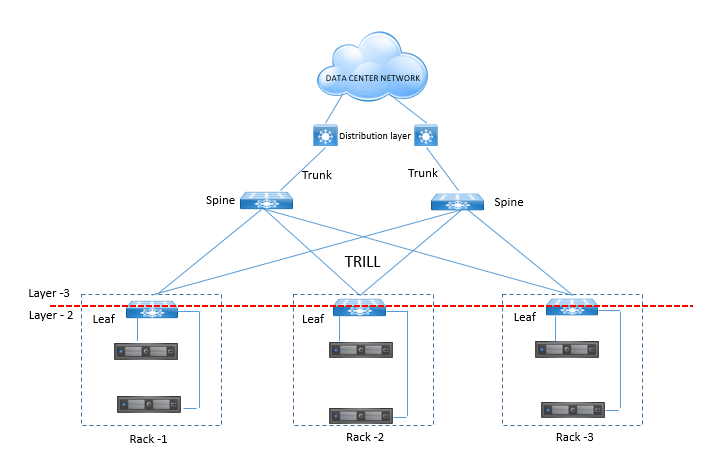

Other designers build the DC network as a spine-and-leaf architecture, which provides equal-cost multipath (ECMP) support to DC workloads while removing the complexities of STP.
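As a rough illustration of how ECMP spreads traffic in a spine-and-leaf fabric, the sketch below hashes a flow's 5-tuple to pick one of several equal-cost uplinks. The spine names and the choice of SHA-256 as the hash are assumptions for illustration only; real switches use vendor-specific hardware hash functions.

```python
import hashlib


def pick_uplink(src_ip: str, dst_ip: str, proto: int,
                src_port: int, dst_port: int, uplinks: list[str]) -> str:
    """Choose one equal-cost uplink for a flow by hashing its 5-tuple.

    Every packet of the same flow hashes to the same uplink, which keeps
    the flow in order, while different flows spread across all uplinks.
    (Illustrative sketch: SHA-256 stands in for a switch's hardware hash.)
    """
    key = f"{src_ip}|{dst_ip}|{proto}|{src_port}|{dst_port}".encode()
    digest = hashlib.sha256(key).digest()
    index = int.from_bytes(digest[:4], "big") % len(uplinks)
    return uplinks[index]


# Hypothetical leaf switch with one uplink to each of four spines.
spines = ["spine1", "spine2", "spine3", "spine4"]
print(pick_uplink("10.1.1.10", "10.2.2.20", 6, 49152, 443, spines))
```

Because the choice depends only on the flow's headers, no protocol like STP is needed to block redundant links: all uplinks stay active and share the load.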

After this series of design changes, VMware introduced NSX, which virtualizes the access layer. Virtualizing the access layer has two requirements:

- IPv4 connectivity among ESXi servers.

- Jumbo frame support, because NSX encapsulation increases the size of the Ethernet frame.
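The jumbo frame requirement follows from simple arithmetic. The sketch below assumes VXLAN encapsulation (as used by NSX for vSphere) over an IPv4 underlay with no IP options: the VM's whole Ethernet frame is wrapped in VXLAN, UDP and IPv4 headers, so a VM using the standard 1500-byte MTU needs a physical (underlay) MTU of at least 1550 bytes. The function names are hypothetical; VMware's commonly cited minimum of 1600 bytes leaves headroom above this figure.

```python
# Header sizes in bytes (assumption: VXLAN over IPv4, no IP options).
INNER_ETHERNET = 14   # the VM's own Ethernet header, carried inside the tunnel
DOT1Q_TAG = 4         # optional 802.1Q tag on the inner frame
VXLAN_HEADER = 8
OUTER_UDP = 8
OUTER_IPV4 = 20


def required_underlay_mtu(vm_mtu: int, inner_vlan_tag: bool = False) -> int:
    """Smallest IP MTU the physical network needs to carry a full-size
    VM packet without fragmentation, assuming VXLAN encapsulation."""
    inner_frame = vm_mtu + INNER_ETHERNET + (DOT1Q_TAG if inner_vlan_tag else 0)
    return inner_frame + VXLAN_HEADER + OUTER_UDP + OUTER_IPV4


def fits(vm_mtu: int, underlay_mtu: int, inner_vlan_tag: bool = False) -> bool:
    """True if the encapsulated packet fits within the underlay MTU."""
    return required_underlay_mtu(vm_mtu, inner_vlan_tag) <= underlay_mtu


print(required_underlay_mtu(1500))                       # 1550
print(required_underlay_mtu(1500, inner_vlan_tag=True))  # 1554
print(fits(1500, 1500))  # False: a standard 1500-byte underlay is too small
print(fits(1500, 1600))  # True
```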

The data center diagram below shows the access layer with NSX: the TOR switches are Layer 3 switches and connect to the distribution-layer switches over Layer 3 links. NSX allows Layer 2 domains to extend across multiple racks that are separated by Layer 3 boundaries.
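One way to carry Layer 2 frames across Layer 3 boundaries is VXLAN encapsulation, which NSX for vSphere uses (this detail goes beyond the text above). The sketch builds the 8-byte VXLAN header defined in RFC 7348 and prepends it to an inner Ethernet frame; the outer UDP/IPv4 headers that the ESXi host's tunnel endpoint would add are omitted. The VNI value, MAC addresses and function names are hypothetical.

```python
import struct


def vxlan_encapsulate(inner_frame: bytes, vni: int) -> bytes:
    """Prepend an RFC 7348 VXLAN header to an inner Ethernet frame.

    Header layout (8 bytes):
      byte 0     flags, with the I bit (0x08) set to mark the VNI as valid
      bytes 1-3  reserved (zero)
      bytes 4-6  24-bit VXLAN Network Identifier (VNI)
      byte 7     reserved (zero)
    """
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    header = struct.pack("!II", 0x08 << 24, vni << 8)
    return header + inner_frame


def vxlan_decapsulate(packet: bytes) -> tuple[int, bytes]:
    """Return (vni, inner_frame) from a VXLAN payload."""
    flags_word, vni_word = struct.unpack("!II", packet[:8])
    if not flags_word & (0x08 << 24):
        raise ValueError("I flag not set; VNI is not valid")
    return vni_word >> 8, packet[8:]


# Hypothetical inner frame: dst MAC, src MAC, EtherType IPv4, dummy payload.
frame = bytes.fromhex("005056000001" "005056000002" "0800") + b"payload"
encapsulated = vxlan_encapsulate(frame, vni=5001)
print(encapsulated[:8].hex())  # 0800000000138900
```

The receiving host strips the header and delivers the original frame, unchanged, to the VM on the other rack, so both VMs behave as if they share one Layer 2 segment identified by the VNI.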
