Distributed Logical Firewall

The distributed firewall (DFW) is replicated across multiple hosts, in the same way that the vSphere distributed switch and the logical router are replicated.

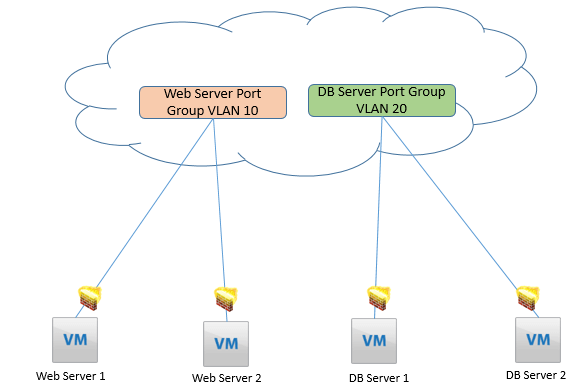

With the DFW, you can deploy any multitier application with all of its tiers in the same Layer 2 broadcast domain, sharing the same subnet and the same default gateway. Because the tiers no longer have to be split into separate subnets to be secured, there is no need to compromise on the diameter of the vMotion range. If you deploy the application on a logical switch, which is carried as a VXLAN overlay across the transport network, you also do not need to worry about Spanning Tree Protocol (STP). The DFW can enforce security between any two VMs, even when they sit in the same Layer 2 broadcast domain.

The DFW provides stateful Layer 2, Layer 3, and Layer 4 security to all virtual workloads running on NSX-prepared ESXi hosts, regardless of the virtual switch they connect to. A VM can be connected to a logical switch, a dvPortgroup, or a standard portgroup. Even if two VMs connect to the same standard portgroup, you can apply any security policy you want between them, and the DFW enforces it.
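To make "stateful" concrete, here is a minimal Python sketch of a per-vNIC stateful filter. It is a conceptual model under simplifying assumptions, not the NSX kernel module or any NSX API: the `Packet` and `StatefulFilter` names, their fields, and all addresses are hypothetical, and real connection tracking also follows TCP state and timeouts. The rule set is consulted only for the first packet of a connection; replies and later packets match the connection table instead. Both VMs sit in the same subnet, mirroring the point that the DFW can separate workloads that share a broadcast domain.

```python
from dataclasses import dataclass
from typing import Set, Tuple


@dataclass(frozen=True)
class Packet:
    src_ip: str
    dst_ip: str
    proto: str     # e.g. "tcp"
    src_port: int
    dst_port: int


class StatefulFilter:
    """Toy per-vNIC stateful filter (hypothetical): the rule set is consulted
    only for the first packet of a flow; later packets, including replies,
    match the connection table."""

    def __init__(self, allowed: Set[Tuple[str, str, str, int]]) -> None:
        # Each entry (src_ip, dst_ip, proto, dst_port) may open a new flow.
        self.allowed = allowed
        self.flows: Set[tuple] = set()

    @staticmethod
    def _flow_key(p: Packet) -> tuple:
        # Direction-independent key so a reply maps to the same flow as its request.
        ends = sorted([(p.src_ip, p.src_port), (p.dst_ip, p.dst_port)])
        return (p.proto, ends[0], ends[1])

    def process(self, p: Packet) -> str:
        key = self._flow_key(p)
        if key in self.flows:
            return "allow (existing flow)"
        if (p.src_ip, p.dst_ip, p.proto, p.dst_port) in self.allowed:
            self.flows.add(key)
            return "allow (new flow)"
        return "drop"


# Hypothetical VMs in the same subnet: only 10.0.0.5 -> 10.0.0.10:443 may open a flow.
fw = StatefulFilter({("10.0.0.5", "10.0.0.10", "tcp", 443)})
req = Packet("10.0.0.5", "10.0.0.10", "tcp", 51000, 443)
reply = Packet("10.0.0.10", "10.0.0.5", "tcp", 443, 51000)
unsolicited = Packet("10.0.0.10", "10.0.0.5", "tcp", 443, 52000)

print(fw.process(reply))        # drop: no flow exists yet
print(fw.process(req))          # allow (new flow)
print(fw.process(reply))        # allow (existing flow)
print(fw.process(unsolicited))  # drop
```

The reply is accepted only after the request has created state, which is the practical difference from a stateless packet filter that would need an explicit rule for the return direction.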

The DFW kernel module inserts itself at slot 2 of the IOChain, the per-vNIC chain of filters that sits between a VM's vNIC and its virtual switch port. As a result, the DFW enforces firewall rules regardless of how the virtual machine connects to the network.
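The reason the switch type does not matter can be shown with a small Python model of an IOChain. This is a hypothetical sketch, not VMware code: `IOChain`, `insert`, `transmit`, and the Telnet-blocking `dfw` rule are invented for illustration, and only slot 2 is populated because that is the slot the text assigns to the DFW. Every frame walks the slots in ascending order before it reaches the switch port, so a drop in slot 2 happens identically for a logical switch, a dvPortgroup, or a standard portgroup.

```python
from typing import Callable, Dict, Optional

# A filter inspects a frame and returns True to pass it on, False to drop it.
Filter = Callable[[dict], bool]


class IOChain:
    """Toy model of one vNIC's IOChain (hypothetical): filters occupy numbered
    slots between the vNIC and the virtual switch port and run in ascending
    slot order."""

    def __init__(self, switch_type: str) -> None:
        # Recorded only for the delivery message; no filter looks at it.
        self.switch_type = switch_type
        self.slots: Dict[int, Filter] = {}

    def insert(self, slot: int, f: Filter) -> None:
        self.slots[slot] = f

    def transmit(self, frame: dict) -> Optional[str]:
        for slot in sorted(self.slots):
            if not self.slots[slot](frame):
                return None  # dropped in the chain; never reaches the switch
        return f"delivered to {self.switch_type} port"


def dfw(frame: dict) -> bool:
    # Hypothetical rule for illustration: block Telnet (TCP/23).
    return not (frame.get("proto") == "tcp" and frame.get("dst_port") == 23)


for sw in ("logical switch", "dvPortgroup", "standard portgroup"):
    chain = IOChain(sw)
    chain.insert(2, dfw)  # the DFW occupies slot 2
    print(sw, "| telnet:", chain.transmit({"proto": "tcp", "dst_port": 23}),
          "| https:", chain.transmit({"proto": "tcp", "dst_port": 443}))
```

Because the filter is attached to the vNIC side rather than to the switch, swapping the switch type changes only where an allowed frame is delivered, never whether a blocked frame is dropped.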

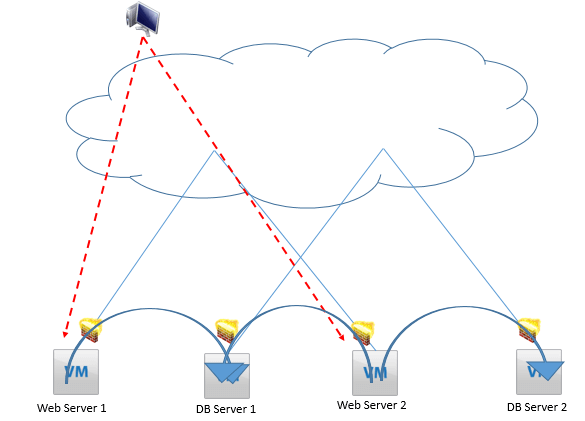

The figure below shows our multitier application, in which the only allowed traffic is from the users to the web servers and from the web servers to the database servers. The DFW makes it possible for all tiers of a multitier application to reside in the same Layer 2 broadcast domain and the same subnet while this policy is still enforced between them.
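The policy just described can be sketched as an ordered, first-match rule table with a final default block. This is a minimal Python illustration under stated assumptions, not NSX configuration syntax: the group names, the addresses, and the service ports (TCP/443 for users to web, TCP/3306 for web to database) are all hypothetical, since the text does not name them. The web and database tiers share the 10.0.0.0/24 subnet, yet web-to-web traffic is still blocked.

```python
import ipaddress
from dataclasses import dataclass
from typing import List, Tuple, Union

# Hypothetical addressing: users on their own network, while the web and
# database tiers share one subnet (10.0.0.0/24) and one default gateway.
GROUPS = {
    "users": [ipaddress.ip_network("192.168.10.0/24")],
    "web": [ipaddress.ip_network("10.0.0.11/32"), ipaddress.ip_network("10.0.0.12/32")],
    "db": [ipaddress.ip_network("10.0.0.21/32")],
}


@dataclass(frozen=True)
class Rule:
    name: str
    src: str                 # group name or "any"
    dst: str                 # group name or "any"
    port: Union[int, str]    # TCP port or "any"
    action: str              # "allow" or "block"


# Evaluated top-down, first match wins; ports 443 and 3306 are assumptions.
RULES: List[Rule] = [
    Rule("users-to-web", "users", "web", 443, "allow"),
    Rule("web-to-db", "web", "db", 3306, "allow"),
    Rule("default", "any", "any", "any", "block"),
]


def member(ip: str, group: str) -> bool:
    if group == "any":
        return True
    addr = ipaddress.ip_address(ip)
    return any(addr in net for net in GROUPS[group])


def evaluate(src: str, dst: str, port: int) -> Tuple[str, str]:
    for r in RULES:
        if member(src, r.src) and member(dst, r.dst) and r.port in ("any", port):
            return r.name, r.action
    raise RuntimeError("no rule matched")  # unreachable: the default rule matches everything


print(evaluate("192.168.10.7", "10.0.0.11", 443))   # ('users-to-web', 'allow')
print(evaluate("10.0.0.12", "10.0.0.21", 3306))     # ('web-to-db', 'allow')
print(evaluate("192.168.10.7", "10.0.0.21", 3306))  # ('default', 'block')
print(evaluate("10.0.0.11", "10.0.0.12", 22))       # ('default', 'block'), same subnet
```

The last call is the interesting one: two web servers in the same subnet cannot reach each other, something a router-based design could only achieve by moving them into different subnets.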

The DFW is made up of firewall rules that match on source and destination addresses together with an Ethertype or a Layer 4 protocol; these rules are then applied to the individual vNICs of virtual machines. The same DFW rule can be applied to a single vNIC of a VM, to all vNICs of the same VM, or to the vNICs of multiple VMs. If the DFW fails, it fails closed, blocking all traffic for the impacted vNIC.
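The rule model above, including the three possible scopes and the fail-closed behavior, can be sketched in Python. This is a hypothetical data model, not the NSX rule schema or API: `DfwRule`, `VnicFilter`, the `applied_to` field, the `healthy` flag, and the vNIC identifiers such as `web-01.vnic0` are invented for illustration. The action for unmatched traffic is a `default_action` parameter here (block by default in this sketch) rather than a claim about NSX's own default rule.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class DfwRule:
    src: str                    # address or "any"
    dst: str                    # address or "any"
    service: str                # an Ethertype such as "ARP", or an L4 service such as "tcp/443"
    action: str                 # "allow" or "block"
    applied_to: FrozenSet[str]  # hypothetical vNIC identifiers this rule is pushed to


class VnicFilter:
    """Toy per-vNIC rule table (hypothetical): holds only the rules whose
    applied_to scope includes this vNIC, and blocks everything if unhealthy."""

    def __init__(self, vnic: str, rules: List[DfwRule], default_action: str = "block") -> None:
        self.vnic = vnic
        self.rules = [r for r in rules if vnic in r.applied_to]
        self.default_action = default_action  # assumption of this sketch
        self.healthy = True                   # hypothetical health flag

    def decide(self, src: str, dst: str, service: str) -> str:
        if not self.healthy:
            return "block"  # fail closed: all traffic for this vNIC is blocked
        for r in self.rules:
            if r.src in ("any", src) and r.dst in ("any", dst) and r.service in ("any", service):
                return r.action
        return self.default_action


rules = [
    # Scope 1: a single vNIC of a VM
    DfwRule("any", "10.0.0.11", "tcp/443", "allow", frozenset({"web-01.vnic0"})),
    # Scope 2: all vNICs of the same VM
    DfwRule("any", "any", "ARP", "allow", frozenset({"web-01.vnic0", "web-01.vnic1"})),
    # Scope 3: vNICs of multiple VMs
    DfwRule("any", "any", "tcp/22", "block", frozenset({"web-01.vnic0", "db-01.vnic0"})),
]

web0 = VnicFilter("web-01.vnic0", rules)
db0 = VnicFilter("db-01.vnic0", rules)
print(len(web0.rules), len(db0.rules))                      # 3 1
print(web0.decide("192.168.10.7", "10.0.0.11", "tcp/443"))  # allow
web0.healthy = False
print(web0.decide("192.168.10.7", "10.0.0.11", "tcp/443"))  # block (fail closed)
```

Each vNIC ends up with only the rules scoped to it, which is why `db-01.vnic0` holds a single rule while `web-01.vnic0` holds all three.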
