Logical Router

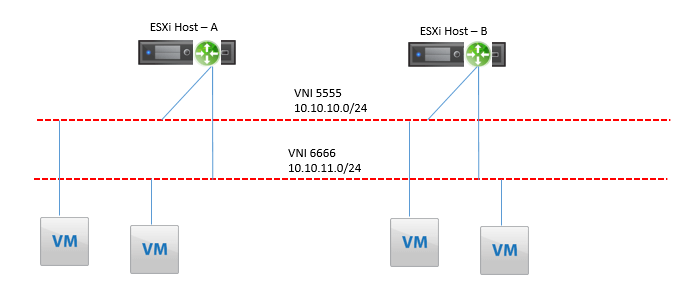

An NSX logical router (or simply logical router) is a router whose data plane runs in the ESXi host kernel. The figure shows a logical router in each ESXi host connected to two logical switches, or VNIs (VNI 5555 and VNI 6666), and each ESXi host has two powered-on VMs. Both ESXi hosts run the same instance of the logical router.

A single ESXi host can run 100 different logical router instances. Each instance is independent of, and separate from, the others running on the same ESXi host.

An NSX domain can run about 1200 logical router instances, and each logical router can support 1000 logical interfaces (LIFs).

The NSX Controller layer 3 master assigns each logical router to an NSX Controller, which manages its control plane. The NSX Controller responsible for a logical router keeps a copy of the master routing table for that logical router and pushes a copy of it to each ESXi host where an instance of the logical router runs.

If the routing table changes, the NSX Controller responsible for that logical router pushes the updated routing table to all ESXi hosts running an instance of that logical router.
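The push behaviour described above can be illustrated with a small Python sketch. This is only a toy model, not NSX code: the `Controller` and `HostInstance` classes, the host names, and the route values are all hypothetical and exist purely to show "one master table, one copy per host, re-pushed on every change".

```python
from dataclasses import dataclass, field


@dataclass
class HostInstance:
    """Hypothetical stand-in for the logical router instance on one ESXi host."""
    name: str
    routes: dict = field(default_factory=dict)


@dataclass
class Controller:
    """Hypothetical model of the controller responsible for one logical router."""
    master_table: dict = field(default_factory=dict)
    hosts: list = field(default_factory=list)

    def push(self):
        # Every host receives its own copy of the master routing table.
        for host in self.hosts:
            host.routes = dict(self.master_table)

    def update_route(self, prefix, next_hop):
        # Any change to the master table is followed by a push to all hosts.
        self.master_table[prefix] = next_hop
        self.push()


# Illustrative values only.
hosts = [HostInstance("esxi-01"), HostInstance("esxi-02")]
controller = Controller(hosts=hosts)
controller.update_route("10.10.0.0/16", "192.168.1.1")
```

After the call to `update_route`, both host objects hold an identical but separate copy of the table, which mirrors the idea that each ESXi host forwards traffic using its local copy.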

Each logical router has two types of interfaces:

Internal LIF: This interface connects the logical router to the logical switches where VMs are attached. No Layer 3 control plane traffic, such as OSPF or BGP hellos, is seen on this interface. A LIF connected to a logical switch is also called a VXLAN LIF, and a LIF connected to a VLAN-backed dvPortgroup is also called a VLAN LIF.

A VLAN LIF supports VLAN IDs in the range 0 to 4095.

External LIF: This interface connects the logical router to the NSX Edge Services Gateway, which in turn provides connectivity for the logical switches and the VMs connected to them. Layer 3 control plane traffic, such as OSPF or BGP hellos, is seen on this interface.
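The interface rules above can be summarised in a short Python sketch. The `Lif` class and `describe_lif` function are hypothetical names invented for illustration, and the sketch assumes the VXLAN/VLAN naming applies to whichever backing the LIF has; it is not an NSX API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Lif:
    """Hypothetical description of one logical interface."""
    name: str
    role: str                      # "internal" or "external"
    backing: str                   # "vxlan" (logical switch) or "vlan" (dvPortgroup)
    vlan_id: Optional[int] = None  # only meaningful for VLAN-backed LIFs


def describe_lif(lif):
    """Return the LIF kind and whether routing protocol hellos are expected."""
    if lif.role not in ("internal", "external"):
        raise ValueError(f"unknown role: {lif.role}")
    if lif.backing == "vxlan":
        kind = "VXLAN LIF"
    elif lif.backing == "vlan":
        # VLAN IDs are limited to the range 0 to 4095.
        if lif.vlan_id is None or not 0 <= lif.vlan_id <= 4095:
            raise ValueError(f"invalid VLAN ID: {lif.vlan_id}")
        kind = "VLAN LIF"
    else:
        raise ValueError(f"unknown backing: {lif.backing}")
    # OSPF/BGP hellos are only seen on external LIFs.
    return {"kind": kind, "routing_protocol_traffic": lif.role == "external"}


web = describe_lif(Lif("web-lif", "internal", "vxlan"))
uplink = describe_lif(Lif("uplink-lif", "external", "vlan", vlan_id=100))
```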

Types of Logical Router

There are two types of logical routers:

- Distributed logical router

- Universal logical router

Distributed Logical Router: A logical router that connects to global logical switches is called a distributed logical router.

Universal Logical Router: A logical router that connects to universal logical switches is called a universal logical router (ULR). A ULR does not support VLAN LIFs; it supports only VXLAN LIFs.
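As a quick illustration of the LIF restriction, the following Python sketch encodes which LIF kinds each router type accepts. The table and the `lif_allowed` helper are hypothetical; the distributed router entry assumes, based on the interface description earlier, that it accepts both VXLAN and VLAN LIFs.

```python
# Hypothetical lookup table: LIF kinds accepted by each logical router type.
SUPPORTED_LIFS = {
    "distributed": {"vxlan", "vlan"},  # assumption: both kinds are accepted
    "universal": {"vxlan"},            # a ULR supports only VXLAN LIFs
}


def lif_allowed(router_type, lif_kind):
    """Return True if the given router type can carry the given LIF kind."""
    return lif_kind in SUPPORTED_LIFS[router_type]
```

For example, `lif_allowed("universal", "vlan")` evaluates to `False`, while the same LIF kind is accepted for `"distributed"`.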

vMAC & pMAC:

A logical router uses two types of MAC address. Each copy of the logical router in an ESXi host gets at least two MAC addresses. The first is called the vMAC; it is the same for all copies of the logical router, and its standard value is 02:50:56:56:44:52.

The second is called the pMAC. One pMAC is assigned to each logical router instance per dvUplink, based on the teaming policy selected during host configuration. Each ESXi host generates its pMACs independently, using the VMware unique ID 00:50:56.

When the logical router sends an ARP request or replies to one, it uses the vMAC. It also uses the vMAC for any egress traffic from its VXLAN LIFs. For all other traffic, including traffic over VLAN LIFs, the logical router uses a pMAC.
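The selection rule above can be written as a small decision function. This Python sketch is illustrative only: `source_mac`, its parameters, and the sample pMAC value are hypothetical, and real hosts make this choice inside the kernel module rather than in code like this.

```python
# Standard vMAC value shared by every copy of the logical router.
VMAC = "02:50:56:56:44:52"


def source_mac(is_arp, lif_kind, pmac):
    """Pick the MAC the logical router uses, following the rule in the text.

    is_arp   -- True for ARP requests and ARP replies
    lif_kind -- "vxlan" or "vlan" (the LIF the traffic leaves from)
    pmac     -- the host-generated pMAC for the relevant dvUplink
    """
    if is_arp:
        return VMAC   # ARP requests and replies use the vMAC
    if lif_kind == "vxlan":
        return VMAC   # egress traffic from VXLAN LIFs uses the vMAC
    return pmac       # all other traffic, including VLAN LIFs, uses a pMAC


# Hypothetical pMAC carrying the 00:50:56 prefix.
example_pmac = "00:50:56:aa:bb:cc"
```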

Assume you have a VM connected to a logical switch, a logical router with an internal LIF in the same logical switch, and the VM has a default gateway of the LIF’s IP. When the VM sends an ARP request for its default gateway’s MAC, the logical router in the same ESXi host where the VM is running sends back an ARP reply with the vMAC.

In this case, when the virtual machine is moved with vMotion, the MAC address of the VM's default gateway is the same at the destination host because it is the vMAC. The same is true if the VM is connected to a universal logical switch with a ULR as its default gateway.

Logical Router Control VM:

As soon as a logical router instance is created, a virtual appliance called the Logical Router Control VM is deployed.

The Control VM handles the dynamic part of the router's control plane, such as forming routing adjacencies and building the forwarding database and routing table.

If the environment has a ULR, multiple independent Control VMs are deployed, one per NSX Manager in the cross-vCenter domain. The Control VM maintains the routing table, and a copy of it is sent to each ESXi host on which an instance of the logical router runs.

The Control VM forwards the dynamic routing table to the NSX Controller, which merges it with its copy of the static routing table to create the master routing table. The NSX Controller then forwards a copy of the master routing table to the ESXi hosts that run a copy of the logical router instance. Future dynamic routing table updates follow the same communication path.
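The merge step can be sketched in Python as follows. The function name and route values are hypothetical, and the tie-break (a static route wins when both tables contain the same prefix) is an assumption made for the sake of the example, not something stated above.

```python
def build_master_table(static_routes, dynamic_routes):
    """Merge static and dynamic routes into one master routing table.

    Both arguments map a prefix string to a next-hop string.
    Assumption: on a duplicate prefix, the static route is kept.
    """
    master = dict(dynamic_routes)   # start from the Control VM's dynamic routes
    master.update(static_routes)    # overlay the controller's static routes
    return master


# Illustrative values only.
static_routes = {"0.0.0.0/0": "192.168.10.1"}
dynamic_routes = {
    "172.16.10.0/24": "192.168.10.2",
    "0.0.0.0/0": "192.168.10.3",
}
master = build_master_table(static_routes, dynamic_routes)
```

The resulting `master` dictionary is what the toy controller would then copy to every ESXi host that runs an instance of the logical router.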
