OpenStack Networking Services

Networking in OpenStack is designed so that each tenant can create its own networks, manage multiple networks, connect networks to one another, access external networks, and deploy other networking services.

In OpenStack, basic networking was provided by Nova, but all of the more advanced networking features are provided by the OpenStack project called Neutron.

Here we will look at the Neutron project in detail.

Neutron Architecture:

The Neutron project in OpenStack enables tenants to create their own networks and configure network elements such as subnets, routers, firewalls, load balancers, and ports.

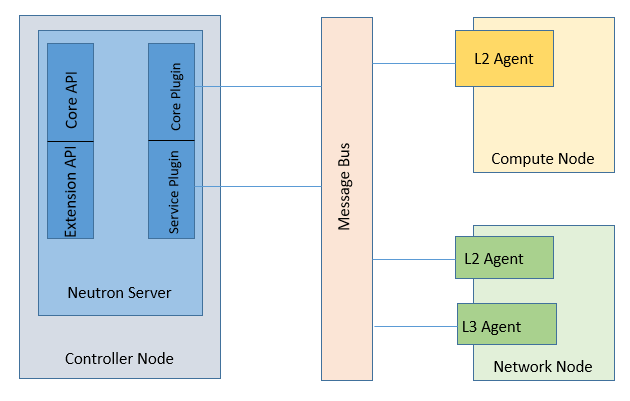

The Neutron server contains the API server, which receives networking service requests and is usually deployed on the controller node. Neutron has a plugin-based architecture, in which plugins supply additional networking capabilities. These plugins reside on the controller node, where they orchestrate resources either by interacting with devices directly or by using agents to control the resources.

As soon as the API server receives a networking request, it passes the request to the associated plugin for further processing.
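The hand-off from the API server to a plugin can be pictured with a small sketch. This is a toy model, not Neutron's code: the class names `ToyPlugin` and `ToyApiServer`, the plugin names, and the resource names are all assumptions made for illustration.

```python
class ToyPlugin:
    """Hypothetical plugin that declares which resource types it handles."""

    def __init__(self, name, resources):
        self.name = name
        self.resources = set(resources)
        self.log = []  # requests this plugin has been asked to process

    def handle(self, action, resource, body):
        self.log.append((action, resource, body))
        return {"handled_by": self.name, "action": action, "resource": resource}


class ToyApiServer:
    """Hypothetical API server that routes each request to one plugin."""

    def __init__(self, plugins):
        # Build a lookup table: resource type -> plugin responsible for it.
        self._by_resource = {}
        for plugin in plugins:
            for resource in plugin.resources:
                self._by_resource[resource] = plugin

    def request(self, action, resource, body=None):
        plugin = self._by_resource.get(resource)
        if plugin is None:
            raise LookupError(f"no plugin registered for resource {resource!r}")
        return plugin.handle(action, resource, body or {})


# Illustrative wiring; the plugin and resource names are made up for this sketch.
l2 = ToyPlugin("l2-plugin", ["network", "port", "subnet"])
l3 = ToyPlugin("l3-plugin", ["router", "floatingip"])
api = ToyApiServer([l2, l3])
result = api.request("create", "network", {"name": "demo-net"})
```

The point of the lookup table is that the API server itself holds no networking logic. It only decides which plugin should do the work.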

Agents are deployed on network or compute nodes. The network node provides the resources needed to implement services such as routing, firewalls, load balancing, and VPN.

Hardware vendors can also implement their own plugins, using a well-defined API, to support requests from the OpenStack API server.

Neutron Plugins: These plugins orchestrate resources either by interacting with devices directly or by using agents to control the resources. There are two types of Neutron plugins.

Core Plugin: This plugin provides Layer 2 connectivity for virtual machines and for the network elements that connect to the network. Whenever there is a request to create a new virtual network or a new port, the API server calls the core plugin to carry it out.

The core plugin manages the following Neutron resources:

- Networks: Represent a Layer 2 domain.

- Ports: Represent endpoints on a virtual network.

- Subnets: Contain Layer 3 addresses along with a defined gateway.
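How the three resources relate can be sketched with a few data classes. This is a simplified model under stated assumptions, not Neutron's schema: the class and field names are hypothetical, and the "first free address" allocation rule is chosen only to keep the example short.

```python
import ipaddress
import uuid
from dataclasses import dataclass, field


@dataclass
class Subnet:
    """Layer 3 addressing for a network: a CIDR block plus its gateway."""
    cidr: str
    gateway_ip: str

    def __post_init__(self):
        network = ipaddress.ip_network(self.cidr)
        if ipaddress.ip_address(self.gateway_ip) not in network:
            raise ValueError("gateway must be inside the subnet CIDR")


@dataclass
class Port:
    """An endpoint attached to a network, holding one address."""
    network_name: str
    ip_address: str
    id: str = field(default_factory=lambda: str(uuid.uuid4()))


@dataclass
class Network:
    """A Layer 2 domain that owns subnets and ports."""
    name: str
    subnets: list = field(default_factory=list)
    ports: list = field(default_factory=list)

    def add_subnet(self, cidr, gateway_ip):
        subnet = Subnet(cidr, gateway_ip)
        self.subnets.append(subnet)
        return subnet

    def create_port(self):
        # Assumption for this sketch: hand out the first unused host address.
        used = {port.ip_address for port in self.ports}
        for subnet in self.subnets:
            for host in ipaddress.ip_network(subnet.cidr).hosts():
                candidate = str(host)
                if candidate != subnet.gateway_ip and candidate not in used:
                    port = Port(self.name, candidate)
                    self.ports.append(port)
                    return port
        raise RuntimeError("no free addresses left on this network")


net = Network("demo-net")
net.add_subnet("10.0.0.0/24", "10.0.0.1")
first_port = net.create_port()
second_port = net.create_port()
```

With the gateway at 10.0.0.1, the two ports receive 10.0.0.2 and 10.0.0.3 in that order.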

Service Plugin: A service plugin is used to configure higher-level networking services such as routing, security firewalls, load balancers, and VPN services.

For example, a service plugin creates the virtual router that provides connectivity between different networks. It also creates the floating IP, which provides a NAT function to expose an internal VM to the external world.
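The NAT role of a floating IP can be illustrated with a minimal sketch, assuming a one-to-one mapping between a floating address and a VM's internal address. The class `ToyRouter` and its methods are hypothetical, and the addresses are documentation and private ranges picked for the example.

```python
import ipaddress


class ToyRouter:
    """Hypothetical virtual router that keeps a one-to-one floating IP table."""

    def __init__(self):
        self._to_fixed = {}     # floating IP -> internal (fixed) IP
        self._to_floating = {}  # internal (fixed) IP -> floating IP

    def associate(self, floating_ip, fixed_ip):
        floating = ipaddress.ip_address(floating_ip)
        fixed = ipaddress.ip_address(fixed_ip)
        self._to_fixed[floating] = fixed
        self._to_floating[fixed] = floating

    def inbound(self, dst_ip):
        # Traffic from outside: rewrite the destination to the VM's address.
        dst = ipaddress.ip_address(dst_ip)
        return str(self._to_fixed.get(dst, dst))

    def outbound(self, src_ip):
        # Traffic from the VM: rewrite the source to the floating address.
        src = ipaddress.ip_address(src_ip)
        return str(self._to_floating.get(src, src))


router = ToyRouter()
router.associate("203.0.113.10", "10.0.0.5")
internal = router.inbound("203.0.113.10")  # "10.0.0.5"
external = router.outbound("10.0.0.5")     # "203.0.113.10"
```

Addresses without an association pass through unchanged in this model, which is a simplification.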

Agents: Agents are deployed on network and compute nodes and talk to the Neutron server over the message bus. Neutron provides various types of agents to implement virtual networking services such as Layer 2 connectivity, DHCP, and routing. Some of the Neutron agents are as follows:

- DHCP Agent: Provides DHCP services and runs on the network node.

- L3 Agent: Implements routing and NAT services.

- VPN Agent: Implements the VPN service and is installed on network nodes.

- L2 Agent: Resides on compute and network nodes and connects VMs and other networking devices, such as virtual routers, to the L2 network. It also interacts with the core plugin to receive the networking information and configuration for VMs.
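The way agents receive work over the message bus can be sketched as a simple publish and subscribe model. This is an illustration only and not Neutron's actual messaging layer: `ToyBus`, `ToyAgent`, the topic names, the node names, and the message fields are all assumptions.

```python
class ToyAgent:
    """Hypothetical agent running on a node and collecting messages."""

    def __init__(self, kind, node):
        self.kind = kind
        self.node = node
        self.inbox = []

    def receive(self, message):
        self.inbox.append(message)


class ToyBus:
    """Hypothetical in-process message bus keyed by topic."""

    def __init__(self):
        self._subscribers = {}

    def subscribe(self, topic, agent):
        self._subscribers.setdefault(topic, []).append(agent)

    def publish(self, topic, message):
        # Deliver to every agent on the topic and report how many got it.
        agents = self._subscribers.get(topic, [])
        for agent in agents:
            agent.receive(message)
        return len(agents)


bus = ToyBus()
l2_compute = ToyAgent("l2", "compute-1")
l2_network = ToyAgent("l2", "network-1")
dhcp = ToyAgent("dhcp", "network-1")

bus.subscribe("l2", l2_compute)
bus.subscribe("l2", l2_network)
bus.subscribe("dhcp", dhcp)

# The server side announces a new port; only the L2 agents are listening.
delivered = bus.publish("l2", {"event": "port_created", "ip": "10.0.0.2"})
```

Here the L2 agents on both the compute node and the network node receive the message, while the DHCP agent does not, matching the placement described in the list above.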
